The AI Revolution Just Hit Lightspeed: 9 Game-Changing Developments You Need to Know About

Let me break down these nine groundbreaking developments that dropped this week.

Whether you're a creator, developer, or just someone curious about where technology is headed, I promise you'll want to know about each one of these.

Trust me – some of these tools are so impressive, they made me question whether I was looking at AI output or human-created work.

1. Luma Ray2: When AI Video Gets Scary Good

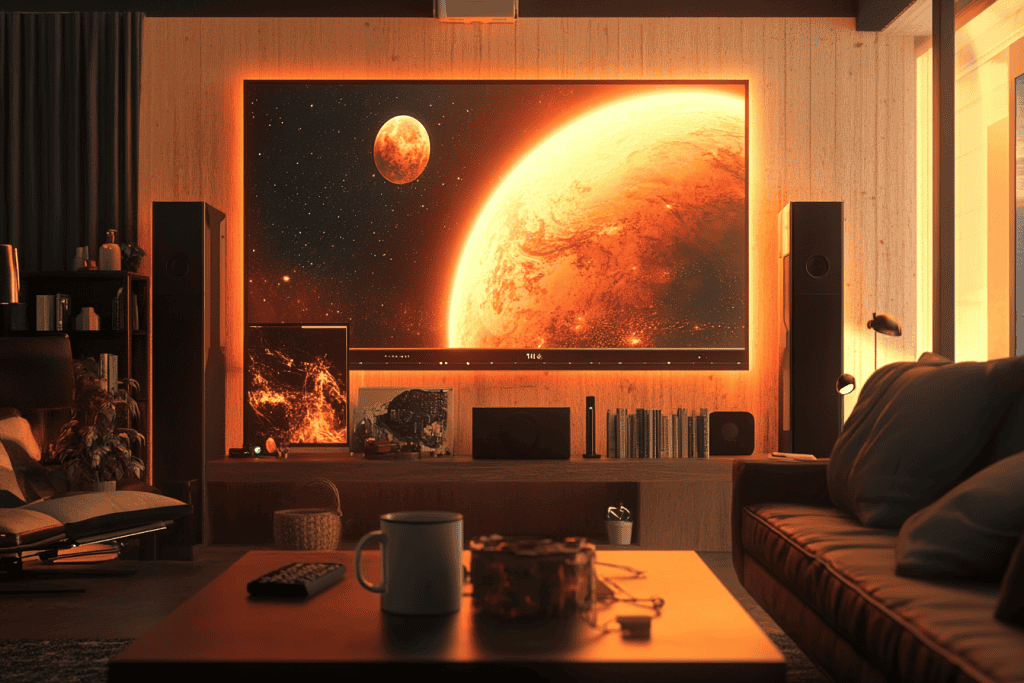

Remember when AI-generated videos looked like a glitchy fever dream? Those days are officially over. Luma's new Ray2 model just dropped, and I'll be honest – it made me do a double-take. Actually, make that a triple-take.

I spent hours testing Ray2 yesterday, and what struck me wasn't just the visual quality (though that's incredible) but the natural motion, which feels almost impossibly smooth.

We're talking about videos where steam actually rises realistically from a perfectly cooked steak, where water orbs float through backlit forests with proper physics, and where human movements finally don't look like they're being performed by robots having a malfunction.

The technical leap here is massive: Luma trained Ray2 with 10x the compute of its predecessor, and it shows. Whether you're creating cinematic scenes, close-up macro shots, or wild visual effects, the results are consistently impressive. I watched a generated clip of a planet exploding that looked like it belonged in a Hollywood blockbuster, not something conjured up by AI in minutes.

For creators, this is a game-changer. The barrier between imagination and visualization just got a lot thinner. And for everyone else? Well, let's just say we're going to need to have some serious conversations about what we can trust our eyes to tell us anymore.

2. ChatGPT Gets a Personal Assistant (How Meta Is That?)

Just when I thought ChatGPT couldn't get more useful, it basically said "hold my bytes" and introduced Tasks. And no, the irony of AI getting its own to-do list isn't lost on me.

Here's how I've been using it: I set up a weekly global news briefing (because who has time to read everything?) and scheduled daily 15-minute workouts tailored to my "I-sit-at-a-desk-too-much" lifestyle.

The real magic isn't just in the scheduling – it's in the execution. Unlike my phone's reminders that I habitually swipe away, ChatGPT actually does the work. When it's time for my news briefing, it doesn't just ping me; it delivers a thoughtfully curated summary of the week's most important stories. When it's workout time, it doesn't just say "exercise now" – it provides a complete, personalized routine based on my previous conversations and preferences.

What's particularly clever is how it integrates with everything else ChatGPT can do. Need a weekly meal plan with grocery lists? Schedule it. Want regular writing prompts for your blog? Set it up. Looking for monthly budget reviews? Put it on autopilot. It's like having a personal assistant who also happens to be a polymath.

The best part? This is just the beta. It's currently rolling out to paying subscribers (Plus, Team, and Pro), but OpenAI plans to eventually make it available to everyone with a ChatGPT account. As someone who's watched too many productivity tools come and go, this one feels different, because it's not just about remembering tasks, it's about actually completing them.

3. Runway Frames: Where AI Image Generation Gets Its Art School Degree

You know that feeling when you see something that makes you want to immediately drop everything and start creating? That's what happened when I first opened Runway Frames. And trust me, as someone who's tested every major image generator out there, it takes a lot to get me excited anymore.

What sets Frames apart isn't just its ability to create images – it's the unprecedented level of stylistic control it offers. Imagine having a master artist at your beck and call who perfectly understands your vision, but also happens to have an infinite palette of styles at their disposal. That's Frames.

I spent the last few days diving deep into its capabilities, and here's what blew me away: The preset styles aren't just your typical "make it look like Van Gogh" filters. They're more like complete artistic philosophies. I found myself creating images that ranged from hyper-realistic product photography to surrealist dreamscapes that would make Salvador Dalí raise an eyebrow.

But here's where it gets really interesting: Frames isn't just about following preset styles. It's designed to help you develop your own visual voice. Through their "Worlds of Frames" feature (which you can check out at runwayml.com/worlds-of-frames), you can explore different artistic directions and then adapt them to your own vision. It's like having access to an infinite mood board that you can actually use to create with.

For creators, this is a watershed moment. Whether you're a professional looking to streamline your workflow or an enthusiast wanting to experiment with new styles, Frames offers something that's been surprisingly rare in AI tools: genuine artistic freedom coupled with reliable consistency.

4. The Democratization of AI: When David Matches Goliath

Here's a story that made me spill my coffee: A team at UC Berkeley just achieved what tech giants spend millions trying to do – and they did it for less than the price of a new iPhone. Let that sink in.

The Sky-T1 model they've created performs on par with OpenAI's o1-preview on several popular reasoning and coding benchmarks. But here's the kicker: they trained it in just 19 hours using 8 GPUs, with a total cost of $450. That's not a typo. When I first read these numbers, I thought there must be a mistake. One caveat: Sky-T1 was fine-tuned from an existing open model (Qwen2.5-32B-Instruct) rather than trained from scratch. For context, training major AI models from scratch typically costs millions of dollars and takes weeks or months.
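For a sense of scale, here's a quick back-of-the-envelope calculation using only the figures quoted above (8 GPUs, 19 hours, $450). It assumes, for simplicity, that the whole budget went to GPU time and was spread evenly; the actual cost breakdown isn't given here.

```python
# Back-of-the-envelope check of the Sky-T1 training figures quoted above.
# Assumption: the full $450 was spent on GPU time, spread evenly across every GPU-hour.
GPUS = 8
HOURS = 19
TOTAL_COST_USD = 450

gpu_hours = GPUS * HOURS                        # 8 GPUs x 19 hours = 152 GPU-hours
cost_per_gpu_hour = TOTAL_COST_USD / gpu_hours  # roughly $2.96 per GPU-hour

print(f"{gpu_hours} GPU-hours at about ${cost_per_gpu_hour:.2f} per GPU-hour")
```

In other words, the whole run used about as much GPU time as a single GPU running for a little over six days.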

What makes this development so exciting isn't just the technical achievement, it's what it represents: proof that David can match Goliath. We're entering an era where groundbreaking AI research isn't limited to tech giants with bottomless budgets. A small team with a good idea and some GPUs can now compete with the biggest names in the industry.

5. Lightning Strikes: MiniMax-01 Rewrites the Rules of AI Attention

Just when I thought I had a handle on this week's AI developments, MiniMax, the company behind Hailuo AI, dropped a technical bombshell that's making even seasoned AI researchers do a double-take. Its new MiniMax-01 model isn't just another language model; it rethinks how AI models pay attention to very long inputs.

Let me break this down in a way that won't make your eyes glaze over: In a traditional AI model, every new word is checked against every word that came before it, so the work grows with the square of the input length, like a student who re-reads the whole textbook every time they turn a page. MiniMax-01's Lightning Attention architecture, a form of linear attention, works more like a student who keeps a running set of notes, so each new page costs roughly the same to process no matter how long the book gets. That's how it can handle a textbook 20-32 times longer than what current leading models can process.
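For the technically curious, here's a toy illustration of why linear attention, the family Lightning Attention belongs to, scales better. It's a generic textbook sketch in plain Python (no softmax, no normalization, tiny made-up vectors), not MiniMax's actual implementation. The first function scores every token against every earlier token, so its work grows with the square of the sequence length; the second keeps a fixed-size running summary and gets exactly the same answer with work that grows linearly.

```python
from typing import List

Matrix = List[List[float]]


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def quadratic_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Causal attention without softmax, computed pair by pair.

    Token i is scored against every token j <= i, so total work grows
    with the square of the sequence length.
    """
    out = []
    for i in range(len(q)):
        row = [0.0] * len(v[0])
        for j in range(i + 1):
            score = dot(q[i], k[j])
            for c in range(len(row)):
                row[c] += score * v[j][c]
        out.append(row)
    return out


def linear_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """The same computation, reordered around a running d_k x d_v state.

    Because there is no softmax, sum_j (q_i . k_j) v_j equals
    q_i . (sum_j k_j v_j^T), so each new token only updates a fixed-size
    state and the total work grows linearly with sequence length.
    """
    d_k, d_v = len(k[0]), len(v[0])
    state = [[0.0] * d_v for _ in range(d_k)]  # running sum of outer(k_j, v_j)
    out = []
    for q_i, k_i, v_i in zip(q, k, v):
        for a in range(d_k):
            for b in range(d_v):
                state[a][b] += k_i[a] * v_i[b]
        out.append([sum(q_i[a] * state[a][b] for a in range(d_k)) for b in range(d_v)])
    return out


# Three tokens with 2-dimensional queries, keys, and values (made-up numbers).
q = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
k = [[1.0, 1.0], [0.0, 1.0], [1.0, 0.0]]
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]

print(quadratic_attention(q, k, v))  # [[1.0, 2.0], [2.5, 4.0], [4.0, 6.0]]
print(linear_attention(q, k, v))     # [[1.0, 2.0], [2.5, 4.0], [4.0, 6.0]]
```

The trade-off is that dropping softmax changes what the model computes, so production architectures add normalization and other refinements that this toy version leaves out; the sketch only shows the scaling argument.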

The numbers here are staggering. We're talking about a context window of up to 4M tokens: in human terms, that's roughly 3 million words, or dozens of novels the model can read, understand, and reference in one go. But what really caught my attention was its perfect score on the needle-in-a-haystack retrieval test, where a single fact is hidden somewhere in an enormous document and the model has to find it. Imagine finding exactly the right quote from a book without using ctrl+F: that's essentially what MiniMax-01 pulled off, without a single miss.
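If you're wondering how a needle-in-a-haystack test actually works, here's a simplified sketch of the idea: hide one fact at different depths inside a long stretch of filler text, ask the model about it, and check whether the answer comes back. The filler, needle, depths, and the `ask_model` callable are hypothetical placeholders; real evaluations measure length in tokens and call an actual model API, while `fake_model` below is just a stand-in so the example runs on its own.

```python
from typing import Callable, Dict, Sequence

# Hypothetical test content; any filler text and any hidden fact would do.
FILLER = "The grass is green and the sky is blue. "
NEEDLE = "The secret ingredient in the sauce is smoked paprika. "
QUESTION = "What is the secret ingredient in the sauce?"
EXPECTED = "smoked paprika"


def build_haystack(n_sentences: int, depth: float) -> str:
    """Repeat the filler and hide the needle at a relative depth (0.0 = start, 1.0 = end)."""
    sentences = [FILLER] * n_sentences
    sentences.insert(round(depth * n_sentences), NEEDLE)
    return "".join(sentences)


def run_needle_test(
    ask_model: Callable[[str, str], str],
    n_sentences: int = 1000,
    depths: Sequence[float] = (0.0, 0.25, 0.5, 0.75, 1.0),
) -> Dict[float, bool]:
    """For each depth, record whether the model's answer contained the hidden fact."""
    results = {}
    for depth in depths:
        context = build_haystack(n_sentences, depth)
        answer = ask_model(context, QUESTION)
        results[depth] = EXPECTED in answer.lower()
    return results


def fake_model(context: str, question: str) -> str:
    """Stand-in for a real LLM call: it 'answers' only if the needle is in its context."""
    return "It's smoked paprika." if NEEDLE in context else "I don't know."


results = run_needle_test(fake_model)
score = sum(results.values()) / len(results)
print(results, f"score = {score:.0%}")
```

Real benchmarks sweep both the context length and the needle's depth, and a perfect score means the model found the needle in every combination.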

Here's where it gets really interesting: They've achieved this while keeping costs surprisingly low. At $0.2 per million input tokens and $1.1 per million output tokens, they're offering Ferrari performance at Toyota prices. For developers and businesses building AI applications, this is like finding a golden ticket.
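To see what those prices mean in practice, here's a tiny cost estimator using the per-million-token rates quoted above. The example request sizes are made up for illustration, and real pricing may change over time.

```python
# MiniMax-01 prices as quoted above, in USD per million tokens.
INPUT_PRICE_PER_MILLION = 0.20
OUTPUT_PRICE_PER_MILLION = 1.10


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """Estimated cost in USD of one request at the quoted per-million-token rates."""
    return (
        input_tokens * INPUT_PRICE_PER_MILLION
        + output_tokens * OUTPUT_PRICE_PER_MILLION
    ) / 1_000_000


# Hypothetical example: send a 1M-token document, get a 2,000-token summary back.
cost = request_cost(1_000_000, 2_000)
print(f"${cost:.4f}")  # $0.2022
```

Even a million-token prompt comes in at roughly 20 cents, and almost all of that is the input side.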

What makes this particularly exciting is that it's open source. Anyone can access it through GitHub or Hugging Face, experiment with it, and build upon it. In a field often criticized for being too closed-off, this level of openness is refreshing.

6. When AI Gets Down to Business: Materials Science

While everyone's been obsessing over AI-generated cat pictures (guilty as charged), Microsoft quietly dropped something that could literally change what our world is made of.

MatterGen, their new AI model, is like having thousands of materials scientists brainstorming around the clock. Rather than screening lists of known compounds, it generates brand-new candidate materials designed to hit the properties you ask for, with potential uses ranging from better solar cells to CO2 recycling.

As someone who once failed spectacularly at high school chemistry, I find this particularly exciting. Instead of scientists spending years in labs testing endless combinations of materials, MatterGen can propose promising candidates for a specific need up front, so lab time goes to the designs most likely to work. It's like having a cheat code for materials science.

7. Your Voice, But Not Really: MiniMax's T2A-01-HD Changes How We Think About Speech

Remember when voice cloning was either expensive, complicated, or just plain bad? MiniMax just shattered that status quo with their T2A-01-HD model, and I'm still trying to process how impressive this is.

I'll share a slightly unnerving experience: I tested the system with a 10-second clip of my morning coffee review. Five minutes later, I was listening to "myself" fluently discussing brewing techniques in Japanese – with all my usual vocal quirks and inflections intact. The uncanny part? The emotional nuances carried over perfectly. My typical enthusiasm for coffee came through clearly, regardless of the language.

But this isn't just about voice cloning. T2A-01-HD comes packed with a library of over 300 pre-built voices, each meticulously categorized by language, gender, accent, age, and style. Think of it as having access to a virtual voice acting studio, complete with professional-grade effects like room acoustics and telephone filters.

The language support is impressive: 17+ languages, including multiple variants of English (US, UK, Australian, Indian), Chinese (both Mandarin and Cantonese), and a broad set of other widely spoken languages. Each comes with regionally authentic accents, so no more robotic translations that make native speakers cringe.

For content creators, podcasters, and businesses, this is a game-changer. Need to localize your content for multiple markets? Want to experiment with different voice styles for your project? Looking to create consistent voice-overs at scale? T2A-01-HD handles it all, and the best part is you can try it for free through their platform.

The implications here are both exciting and slightly daunting. We're entering an era where the line between real and synthesized voice is becoming increasingly blurred. As someone who works with audio content, I'm fascinated by the possibilities – and mindful of the responsibilities that come with such powerful technology.

8. Codestral 25.01: When Your AI Coding Buddy Gets a Speed Boost

As someone who spends way too much time staring at code, Mistral AI's latest announcement hit different. Their new Codestral 25.01 isn't just another coding assistant – it's like they strapped a rocket to their already impressive code generation engine.

Let me paint you a picture: Yesterday, I was working on a particularly stubborn piece of JavaScript (aren't they all?), and I decided to give Codestral 25.01 a test drive through the Continue extension in VS Code. The difference was immediate and, frankly, a bit shocking. What used to take multiple back-and-forth iterations happened almost in real time. We're talking about code completion and generation that's roughly twice as fast as its predecessor.

But raw speed isn't the whole story here. What really impressed me was the quality of the code being generated. Usually, when you optimize for speed, something has to give – but Codestral 25.01 somehow manages to maintain (and in some cases improve) code quality while working at hyperspeed.

The best part? This isn't locked behind some expensive paywall. Anyone can access it right now by simply installing the Continue extension in VS Code. In a world where every new dev tool seems to come with a subscription model, this kind of accessibility is refreshing.

For developers, this means less time waiting for suggestions, fewer context switches, and more time actually solving problems. It's like having a senior developer looking over your shoulder, but one who can type twice as fast and never gets tired of helping.

9. Krea 3D: When 2D Just Isn't Enough Anymore

Just when I thought I'd seen all the AI surprises for the week, Krea dropped a bombshell that had me grinning like a kid in a toy store. They've launched a free feature that turns regular images into 3D objects – and not in that janky, "well, I guess that's kind of 3D" way we've seen before.

Even better, you don't need to be a professional 3D artist, and you don't need a subscription: Krea has made it free for everyone. Yes, free.

For creators, this opens up a whole new world of possibilities. Product photographers can create virtual showcases without physical products. Game developers can rapidly prototype assets. Social media creators can add an extra dimension to their content without needing a degree in 3D modeling.

And for the rest of us? Well, let's just say I've already spent more time than I'd care to admit turning random objects in my house into 3D models and placing them in increasingly ridiculous scenarios. It's not just a tool – it's a playground for your imagination.

The Future Just Arrived (And It's Moving Faster Than We Thought)

As I sit here wrapping up this whirlwind tour of the last week in AI, I can't help but feel we're witnessing a pivotal moment in technology. This isn't just about individual breakthroughs anymore – it's about how these technologies are converging to create something entirely new.

Think about it: In just one week, we've seen AI that can create Hollywood-quality videos (Luma Ray2), manage our lives (ChatGPT Tasks), generate art with unprecedented control (Runway Frames), process entire books' worth of information (MiniMax-01), discover new materials (MatterGen), clone voices across languages (T2A-01-HD), code at twice the speed (Codestral), and turn flat images into 3D objects (Krea) – all while becoming more accessible and affordable than ever before.

What strikes me most isn't just the technical achievements, but how democratized these tools have become. When a Berkeley team can match industry leaders with $450 worth of computing power, and when sophisticated AI tools are being released for free, we're entering a new era of innovation where good ideas matter more than deep pockets.

For creators, developers, and businesses, the message is clear: The future isn't coming – it's here. The question isn't whether to adapt, but how quickly you can integrate these tools into your workflow. Because if this week is any indication, the pace of innovation isn't slowing down – it's accelerating.

As I look ahead, I can't help but wonder: If this is what one week in AI looks like in 2025, what will we be talking about by the end of the year? Whatever it is, I have a feeling we're going to need more coffee.

Stay curious, stay creative, and keep watching this space. The revolution isn't just being televised – it's being AI-generated, voice-cloned, 3D-rendered, and coded at twice the speed.

About The Author

Carrie's ability to adapt and innovate with AI tools has transformed her creative process, making her an invaluable voice at Easy AI Beginner.